Conclusions drawn from the discussion with Lee Hibbard – 9th of November (EAVI Conversations 2021)

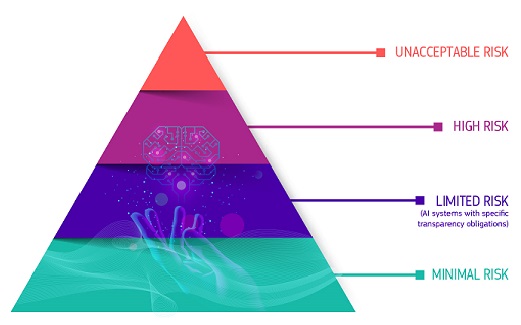

Artificial Intelligence is not a simple topic to address, in either its meaning or its implications. That is why most users are puzzled when asked to define it. It is no wonder that only 15% of the event participants were confident about what AI entails. Despite its widespread use, people are not aware of how it works. As Lee Hibbard put it: “If we don’t know what AI is, maybe we don’t know it”. The overall feeling around AI is uncertainty, especially about the consequences this technology has and will have on both individuals and societies. This is even more true given that, in this period usually called the digital revolution, technology is developed before any recommendations or safety measures to counteract its impact. However, this is not a new trend. When the first cars were introduced onto the market, they did not have seat belts. Today this sounds hard to believe, but it is true: seat belts did not come along until later. Was it a testing phase, or were people unaware of the risks? This example clearly illustrates the current circumstances.

This is a trial period for AI. There is no doubt that we do not make decisions based on what we know, and we do not interact with AI with all the risks in mind. It is a common belief that, as technology progresses faster and faster, we all pursue whatever seems more efficient and cost-effective, no matter the consequences.

There is a powerful question we tried to address during the session: “Was it better in the old days?” The truth is that there is no point in going backwards. ICTs are part of us and serve multiple purposes in our daily lives, so we need to look ahead to the future, especially because using technology, social media and devices is no longer a choice but an obligation. Looking back will not solve any of our problems. We should accept that we are where we are and embrace both the challenges and the benefits.

When we question the role AI plays nowadays, we cannot ignore that big companies have a major part in it. They do not seem to have made a formal commitment to respect ethics or human rights. However, to be fair, we cannot forget that it is in a business’s DNA to make a profit. Tech companies focus on surveillance capitalism that keeps us glued to the screen because, at the end of the day, this is a winning business model. It is difficult to recognise that corporations may not have a plan to destroy people’s lives; it is just people doing what they do. Lee Hibbard raised an interesting point here: “There are no enemies when it comes to technology, it is just a matter of defending us from ourselves.”

One important aspect was highlighted multiple times: individual responsibility. People are aware that they are offered free services, but do they reflect on the reasons?

Behind social media there are algorithms, and it’s common knowledge that they strive to keep our attention for as long as possible, whether we encounter negative or positive content. They create ties between people and devices that can seem like salvation to some. They help us build online networks resembling our societies, powerful enough to affect our feelings, actions, and thoughts. We are becoming more and more vulnerable. Are we all becoming addicted? Many studies have shown that this addiction is real. The COVID-19 pandemic is a clear example. The infodemic that followed increased people’s anxiety, sense of uncertainty and disempowerment, and these feelings worsened because human beings are naturally inclined to be in control of their emotions. Therefore, it is crucial that each one of us does a little bit to translate good intentions into actions and comes up with personal solutions to these problems. You might legitimately ask yourselves at this stage how a single person can find appropriate solutions to technology’s negative effects. Unfortunately, individual responsibility is only one element of the equation, and it needs to be coupled with the knowledge and tools that lead to a balanced and conscious use of devices. It is precisely this knowledge that people are missing.

Let’s go back for a moment to the car: to drive one, you need to pass a test and get a driving license, but we do not pass a test to use technology! There might be a common feeling that, since technology is not going to kill us, there is no need for one. Is it so? We are all aware of the scandals that happened in political elections because of social media influence, and if social media are not killing human beings, they are certainly killing democracy. These are just hypotheses. Unfortunately, there is no magic bullet, and the solution is hard to find. Instead, it is a process that requires time, since there are many topics to address, with regulation being the main one, covering human rights, ethics and freedom of speech. More accountability and knowledge are needed, and much more should be done when it comes to people. Critical media literacy is important for everyone, but it is only a partial solution to a big problem.

Looking ahead, we cannot deny that people are quite optimistic about the future of AI. It might be that, as happened with cars, safety measures will be introduced at some point and people will pay more and more attention to the risks while benefitting from its responsible use. Let’s invest in thinking about the use of AI and our responsibilities, first as users and then as citizens, and let’s provide spaces for people to have more discussions, more human connections and a healthier online/offline balance.

Are you interested in watching the different sessions of the EAVI Conversations 2021?
