Deceptive rolls filled with disinformation, fake accounts, trolls, and an unhealthy secret-algorithm ingredient from big tech. Clickbait dipping sauce comes for free. Sounds too good to be true? It probably is…

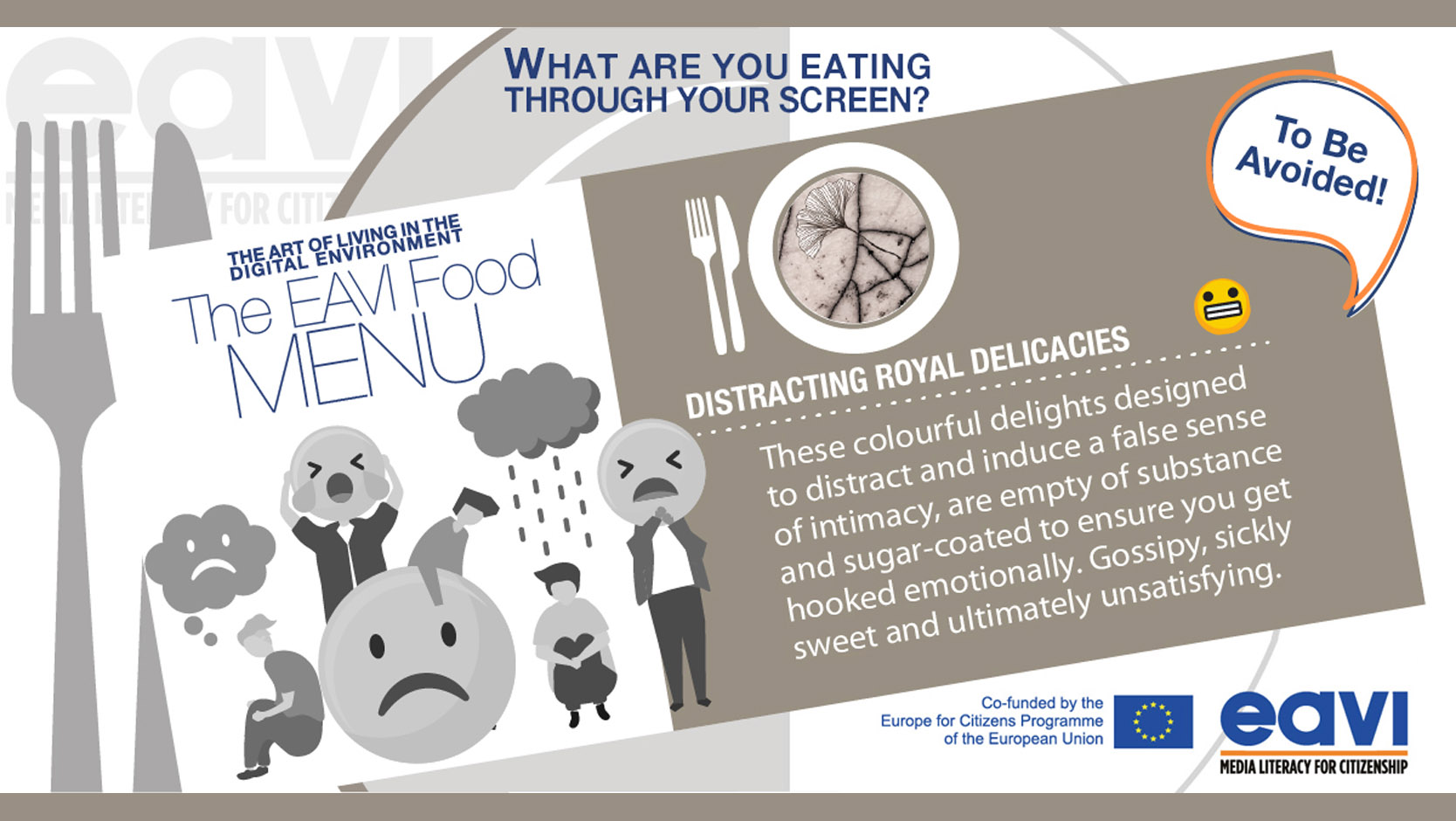

When was the last time you saw news online that really made you mad, excited, or agitated? Did you check whether it was a trustworthy piece of information, or did you just take it for granted? Clickbaity, sensational stories and pictures easily attract our attention and spread rapidly through social networks. After all, made-up stories are often more glamorous and shocking than real news.

Unfortunately, this can become dangerous very quickly, especially when fake accounts deliberately spread false information to disrupt social cohesion and trolls stir the pot to fuel existing conflicts. All this puts our democracies at risk, because real-life decisions such as how we vote and whether we accept safety precautions (for example, Covid-related measures) depend on the information we consume.

But how can we tell what is real from what is fake? How can we shield ourselves against those who try to get strong reactions out of us for fun or to sway public opinion? Amy Affelt [1] offers suggestions on what to look out for when trying to detect fake and manipulative information.

Unfortunately, aside from “just” fake news, which can be discarded with some effort, an even more dangerous kind of false information is increasingly spreading across the internet: so-called deepfakes. Intelligent algorithms are able to create false images, videos, and audio files that are almost indiscernible from real footage. For this, a video of a source person is used, along with unrelated audio, image, and video footage of a target person [2]. Deepfake technology is then used to merge the facial features and voice of the target person onto the source person’s video. In this way, for example, a false video of Elon Musk smoking a joint can be created, or the voice of a CEO can be faked to scam a company out of large sums of money.

The first deepfake video was published in 2017: the face of a celebrity was swapped onto the body of a porn performer. This trend persisted: in 2019, the AI firm Deeptrace found 15,000 deepfake videos online, 96% of them pornographic [3]. In the beginning, deepfake technology still required a lot of data about the person to be faked, so celebrities and people in the public eye were the first obvious targets. Since then, the technology has become a lot more accessible, and today a single still image can be enough to create a deepfake video of someone.

This is highly problematic for several reasons. Deepfakes can disrupt society and politics when national or organizational leaders are faked to insult each other, utter threats, or do publicly unacceptable things. At the same time, the mere existence of deepfakes erodes people’s trust in the media: if even videos, often seen as solid pieces of evidence, can be faked to perfection, what can we still put our trust in? And finally, on the flip side, people can claim that pretty much anything they said or did never happened and was just a deepfake. Reality itself becomes debatable.

While cheaply produced deepfakes can sometimes be spotted by paying close attention to bad lip-synching, poorly rendered details like hair, rough edges around patched faces, or mismatches in skin color, the systematic detection of deepfakes can only be realized with more AI. Constant efforts are made to accelerate research and advancements in this field, but such attempts always fuel an arms race: as deepfake detection algorithms evolve, so does deepfake technology. An approach that could offer hope and a better solution is blockchain technology, which, put simply, creates a chain of metadata blocks that cannot be changed unnoticed and that allow the origin of a video or image to be identified.
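To make the idea of a chain of metadata blocks a little more concrete, here is a minimal Python sketch. It is a toy hash chain, not a real blockchain: there is no network, no consensus, and no signatures, and all names (`append_block`, `chain_is_valid`, `media_matches`, the `source` field) are made up for illustration. It only shows why changing an earlier block, or swapping in manipulated footage, becomes detectable.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 fingerprint of some bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def block_hash(block: dict) -> str:
    """Hash every field of a block except its own stored hash."""
    payload = {k: v for k, v in block.items() if k != "hash"}
    return sha256_hex(json.dumps(payload, sort_keys=True).encode("utf-8"))


def append_block(chain: list, media_bytes: bytes, source: str) -> dict:
    """Add a metadata block that points back to the previous block."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    block = {
        "index": len(chain),
        "source": source,                        # hypothetical origin label
        "media_hash": sha256_hex(media_bytes),   # fingerprint of the footage
        "prev_hash": prev_hash,                  # link to the previous block
    }
    block["hash"] = block_hash(block)
    chain.append(block)
    return block


def chain_is_valid(chain: list) -> bool:
    """Check that no block was altered and that all links are intact."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev_hash"] != prev_hash or block["hash"] != block_hash(block):
            return False
        prev_hash = block["hash"]
    return True


def media_matches(chain: list, index: int, media_bytes: bytes) -> bool:
    """Check whether footage is the one registered in a given block."""
    return chain_is_valid(chain) and chain[index]["media_hash"] == sha256_hex(media_bytes)


chain = []
append_block(chain, b"original video bytes", source="newsroom camera A")
append_block(chain, b"edited video bytes", source="newsroom editing desk")

print(chain_is_valid(chain))                                 # True
print(media_matches(chain, 0, b"manipulated video bytes"))   # False
```

Because each block stores the hash of the block before it, quietly editing an old entry breaks every link that follows. In a real system, the chain would additionally be shared across many independent parties so that no single one could rewrite it.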

Additional Links

Are you in the mood for a challenge? Try this fun online game that helps you learn how to spot internet trolls. Also, here are some tips on how to deal with trolls when you encounter them.

Not sure if the latest news you found online is fake? The Google Fact Check Explorer helps you find out whether fact-checkers around the world have deemed your information false or fishy. For more information about how to spot fake news yourself, have a look at our infographic Beyond Fake News and our fake news detection game, Beyond the Headlines.

And finally, some more information about deep fakes and how blockchain could help fight them:

Can blockchain block fake news and deep fakes?

The Blockchain solution to our Deepfake Problems

Research Resources

1: Affelt, A. (2019). How to Spot Fake News. All That’s Not Fit to Print, pp. 57–84. doi: 10.1108/978-1-78973-361-720191005

2: Nguyen, T. T., Nguyen, C. M., Nguyen, D. T., Nguyen, D. T., & Nahavandi, S. (2019). Deep Learning for Deepfakes Creation and Detection: A Survey. Retrieved from: https://arxiv.org/abs/1909.11573

3: Sample, I. (2020). What are deepfakes – and how can you spot them? Retrieved from: https://www.theguardian.com/technology/2020/jan/13/what-are-deepfakes-and-how-can-you-spot-them [Accessed: 31.03.21]